- Claps

- Top 10 Authors and Claps for their posts

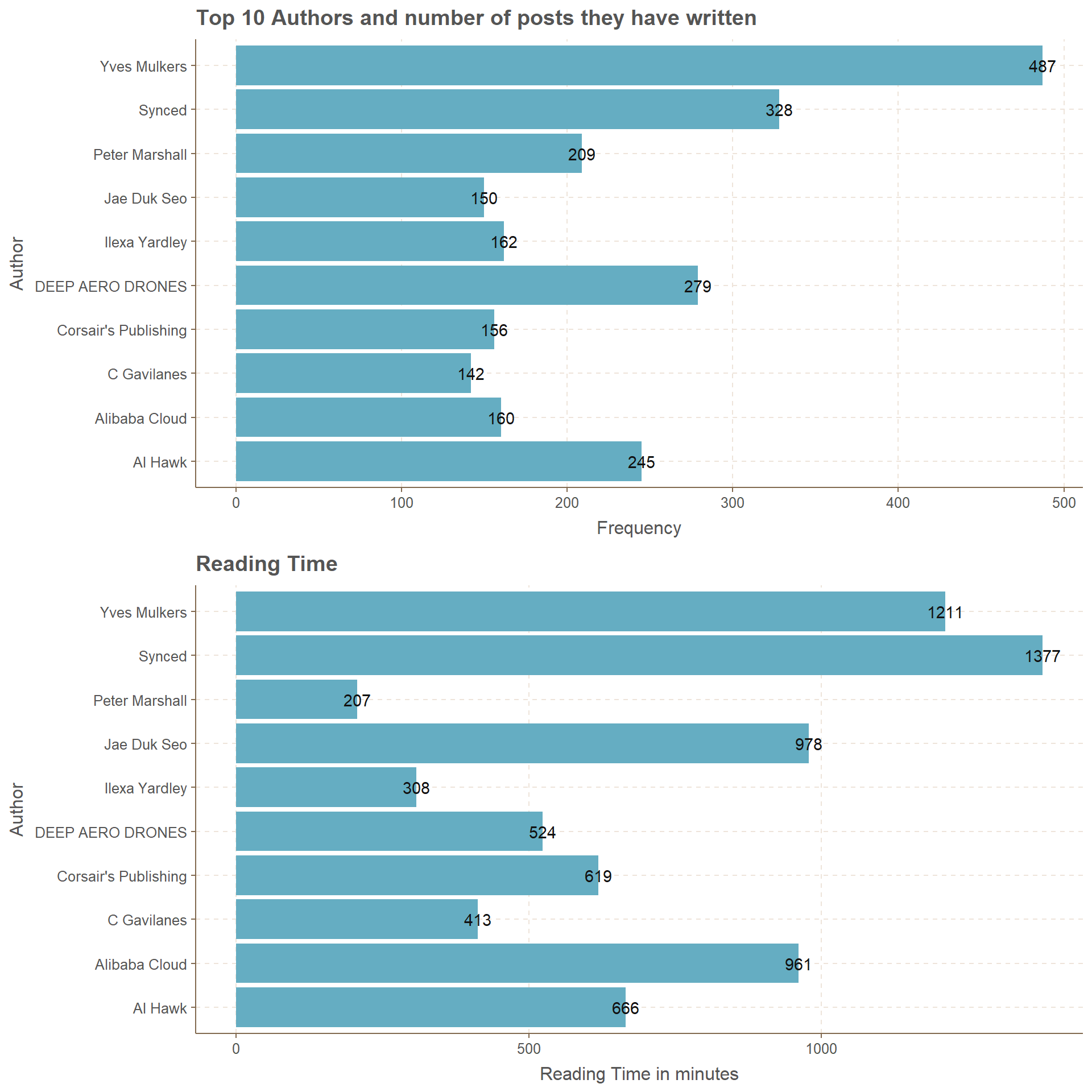

- Top 10 Authors with most posts

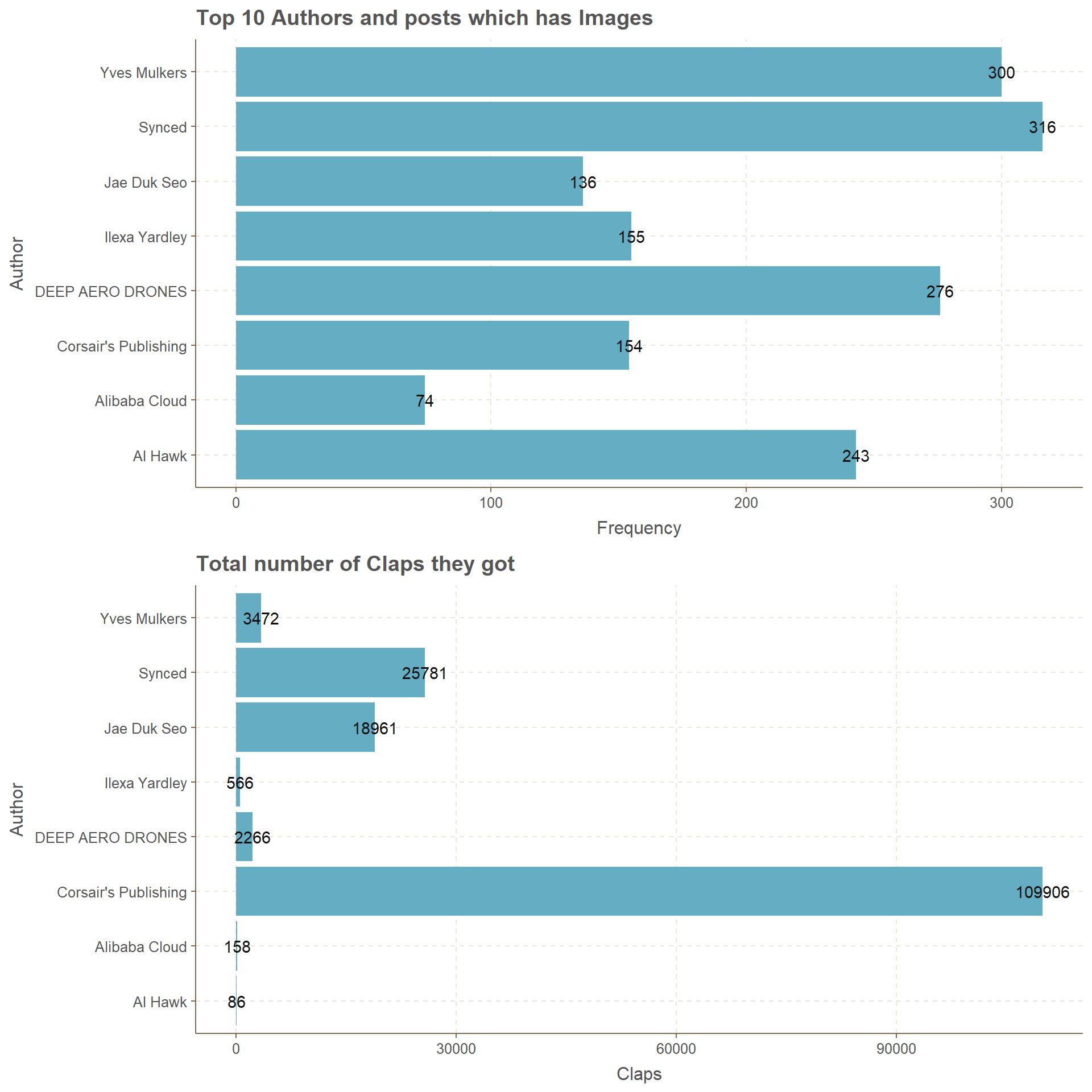

- Top 10 Authors who have posts with Images

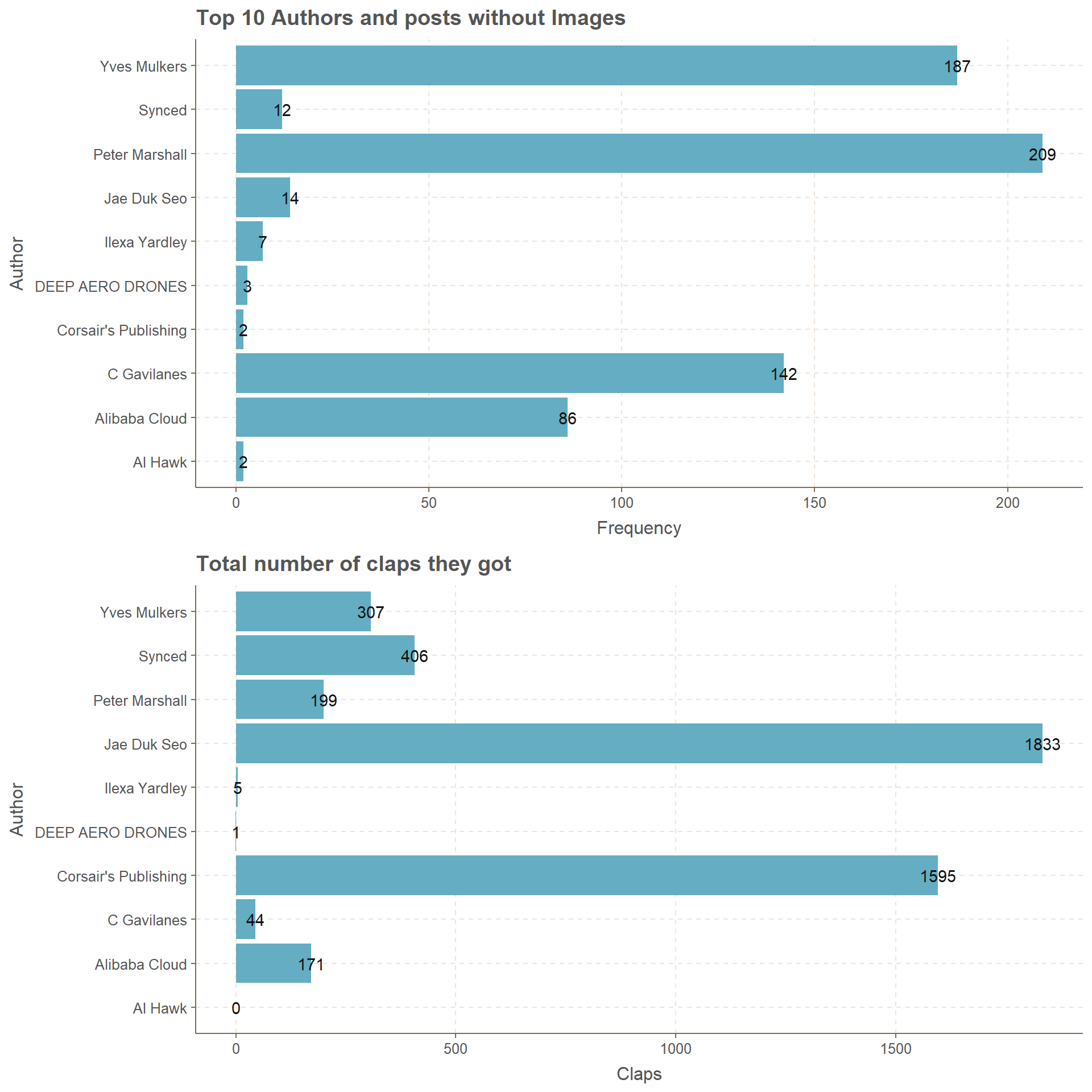

- Top 10 Authors who have posts without Images

- Top 10 Authors and Reading time for their posts

- Top 5 Publications with most posts

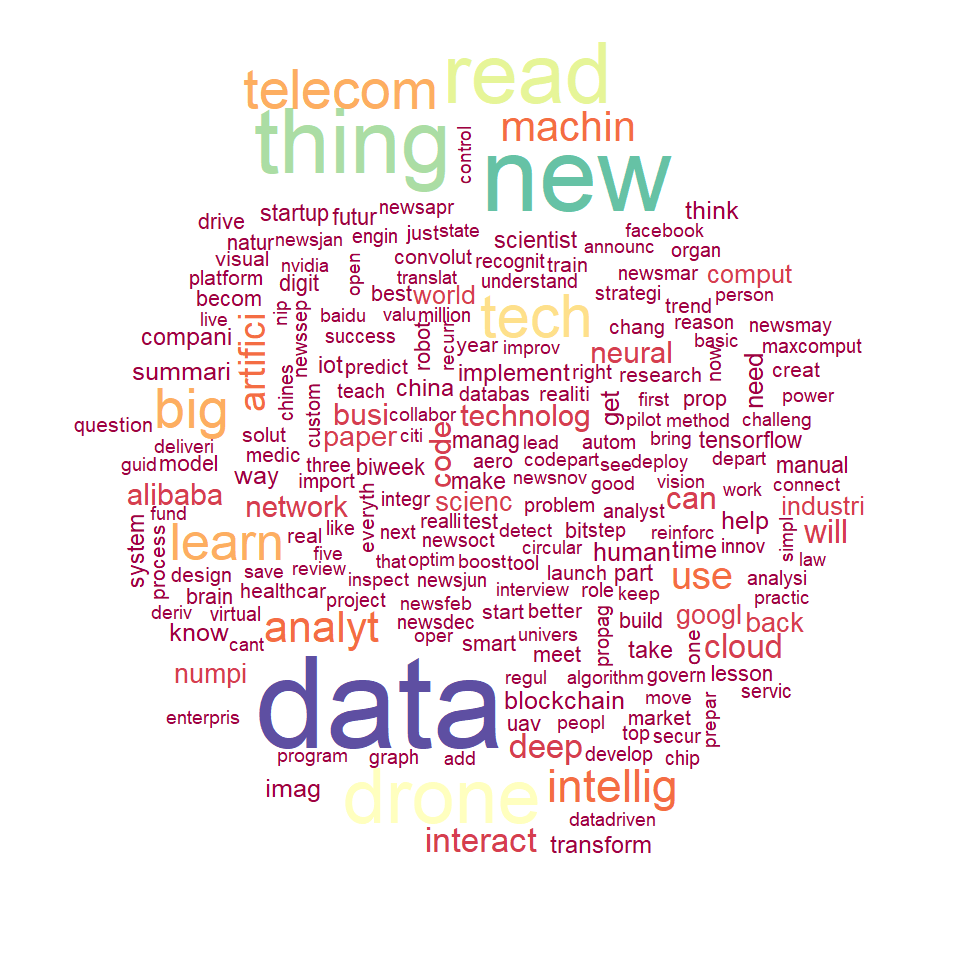

- Word Cloud for the Titles from Top 10 Authors

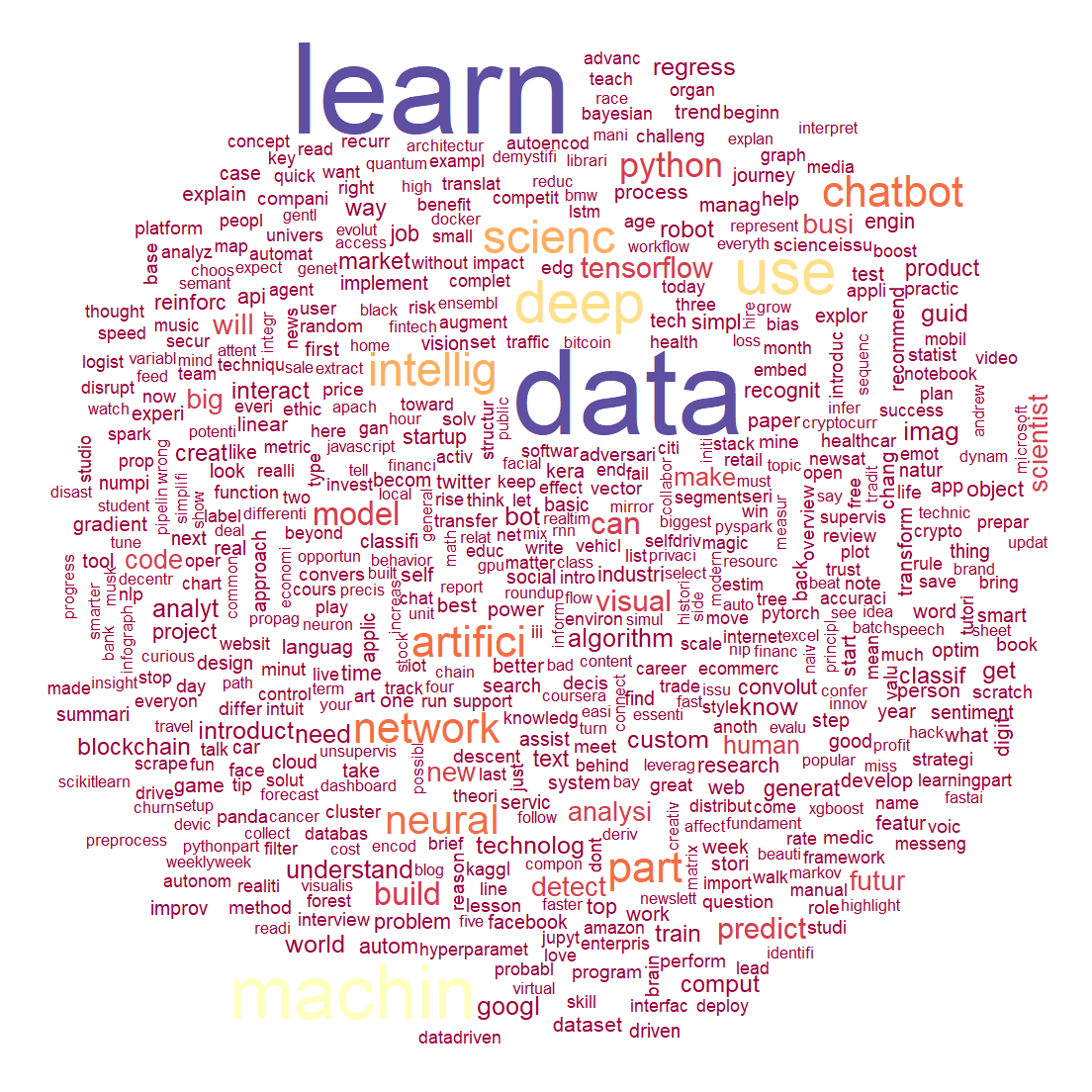

- Word Cloud for the Titles from Top 5 publications

- Conclusion

- Further Analysis

#loading packages

#load data

library(readr)

#manipulate data

library(dplyr)

library(magrittr)

# format table with expense

library(formattable)

# knitting the document

library(knitr)

# another type of table

library(kableExtra)

# playing with strings

library(stringr)

# combining two plots

library(grid)

library(gridExtra)

# plotting (ggplot() is used below, so load ggplot2 explicitly)

library(ggplot2)

# that theme you wanted

library(ggthemr)

# text analysis

library(tm)

library(SnowballC)

library(RColorBrewer)

library(wordcloud)

# adding theme called fresh for plots

ggthemr("fresh")

#loading the data

medium <- read_csv("medium_datasci.csv")

attach(medium)

This post focuses on claps in relation to authors and publications: does writing a lot of posts earn you popularity and claps? The code looks at the top 10 authors with the most posts and the claps they have received. Further, does having an image in the post matter? Finally, word clouds are drawn from the titles written by the top 10 authors and the top 5 publications.

my take medium posts on week 36 #tidytuesday https://t.co/FTAZh0vKI8

— Amalan Mahendran (@Amalan_Con_Stat) December 8, 2018

Claps

The table shows that 25,729 posts have 0 claps, 7,093 posts have only one clap, and 3,044 posts have 2 claps. The one oddity is posts with exactly 50 claps: their count is 970, which is higher than the counts for 7, 8, 9, 11, 12 and 13 claps.

# extracting the 15 most frequent clap counts

claps_table<-table(claps) %>%

sort() %>%

tail(15)

# table it up

kable(t(claps_table),"html") %>%

kable_styling(bootstrap_options = "striped",full_width = T) %>%

row_spec(0,bold = T,font_size = 13,color = "grey")

| 13 | 12 | 9 | 8 | 11 | 7 | 50 | 10 | 6 | 5 | 4 | 3 | 2 | 1 | 0 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 602 | 634 | 661 | 721 | 759 | 864 | 970 | 989 | 1124 | 1389 | 1402 | 2150 | 3044 | 7093 | 25729 |
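The same kind of frequency table can also be built as a data frame with dplyr's `count()`. This is only a minimal sketch: the `toy` data frame and its values are made up for illustration and stand in for the real `medium` data, and the column name `posts` is my own choice.

```r
library(dplyr)

# Hypothetical toy data standing in for medium$claps (not the real values)
toy <- data.frame(claps = c(0, 0, 0, 1, 1, 2, 50, 50, 0, 3))

# Count how many posts share each clap value, most frequent first,
# and keep at most the 15 most frequent values
claps_freq <- toy %>%
  count(claps, sort = TRUE, name = "posts") %>%
  head(15)

print(claps_freq)
```

Compared with `table()` followed by `sort()` and `tail()`, this keeps the result as a data frame, which can be passed straight to `kable()`.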

Top 5 Publications with most posts

The publication with the most posts also has the highest number of claps, and the order seen in the “Top 5 Publications and number of posts they have written” plot is maintained in the “Total number of Claps they got” plot as well. This suggests that when you write a lot of posts under a publication you will receive a lot of claps, because of the standing these specific publications hold on Medium.

# finding the top publications by number of posts

# summary.factor(medium$publication) %>%

# sort() %>%

# tail(11)

# extracting posts only from the top 5 publications with most posts

Top5_pub<-subset(medium,

publication =="Towards Data Science" |

publication == "Hacker Noon" |

publication == "Becoming Human: Artificial Intelligence Magazine" |

publication =="Chatbots Life" |

publication == "Data Driven Investor" )

# plotting the top 5 publications

g1_Top5_p<-ggplot(Top5_pub,aes(x=str_wrap(publication,15)))+

coord_flip()+ geom_bar()+

xlab("Publication")+ylab("Frequency")+

ggtitle("Top 5 Publications and number of posts they have written")+

geom_text(stat = 'count',aes(label=after_stat(count)),hjust=0.5)

# plotting the top 5 publications and their claps

g2_Top5_p<-Top5_pub[,c("publication","claps")] %>%

group_by(publication) %>%

summarise(claps = sum(claps)) %>%

ggplot(.,aes(x=str_wrap(publication,15),y=claps))+

geom_bar(stat="identity")+

xlab("Publication")+ylab("Claps")+

ggtitle("Total number of Claps they got")+

coord_flip()+geom_text(aes(label=claps),hjust =0.5 )

# plotting two plots at same grid

grid.arrange(g1_Top5_p,g2_Top5_p,ncol=1)
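The claim that both plots share the same ordering can also be checked in code rather than by eye. The sketch below assumes a small made-up data frame, `toy_pub`, with hypothetical publications "A", "B" and "C"; in the post the input would be `Top5_pub`.

```r
library(dplyr)

# Hypothetical toy data; the names and clap values are invented
toy_pub <- data.frame(
  publication = c("A", "A", "A", "B", "B", "C"),
  claps       = c(10, 20, 5, 8, 2, 1)
)

# Number of posts and total claps per publication
pub_summary <- toy_pub %>%
  group_by(publication) %>%
  summarise(posts = n(), claps = sum(claps))

# Publications ranked by number of posts and by total claps
by_posts <- pub_summary %>% arrange(desc(posts)) %>% pull(publication)
by_claps <- pub_summary %>% arrange(desc(claps)) %>% pull(publication)

# TRUE when both rankings agree
same_order <- identical(by_posts, by_claps)
print(same_order)
```

If `same_order` is `FALSE` on real data, at least one publication ranks differently by claps than by posts.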

Word Cloud for the Titles from Top 5 publications

This word cloud has similar restrictions on the number of words and the minimum frequency of a word. The words appear as stems because the titles are stemmed before plotting. Stems such as data, learn, use, machin, network, deep, scienc and artifici have the highest frequencies. Further, stems such as neural, intellig, chatbot, part and python also occur with significant frequency. Here we can clearly see that there can be more than 1000 words.

#convert into data frame

Top5_pub<-data.frame(Top5_pub)

# Calculate Corpus

Top5_pub.Corpus<-Corpus(VectorSource(Top5_pub$title))

#clean the data

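# lower-case the titles; content_transformer() wraps base functions for tm_map

# (the earlier PlainTextDocument step was overwritten by this one, so it is dropped)

Top5_pub.Clean<-tm_map(Top5_pub.Corpus,content_transformer(tolower))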

Top5_pub.Clean<-tm_map(Top5_pub.Clean,removeNumbers)

Top5_pub.Clean<-tm_map(Top5_pub.Clean,removeWords,stopwords("english"))

Top5_pub.Clean<-tm_map(Top5_pub.Clean,removePunctuation)

Top5_pub.Clean<-tm_map(Top5_pub.Clean,stripWhitespace)

Top5_pub.Clean<-tm_map(Top5_pub.Clean,stemDocument)

# save as png

#png(filename = "wordcloud2.png",width = 1024,height = 768)

# plot the word cloud

wordcloud(Top5_pub.Clean,max.words = 1500,min.freq = 10,

colors = brewer.pal(11,"Spectral"),random.color = FALSE,

random.order = TRUE)
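To back up which stems are most frequent with numbers instead of reading them off the cloud, the term frequencies can be tabulated. This is a rough sketch under a few assumptions: the three `titles` are invented stand-ins for `Top5_pub$title`, only part of the cleaning is repeated, and `VCorpus()` is used here instead of `Corpus()`.

```r
library(tm)
library(SnowballC)  # stemDocument() relies on this package

# Hypothetical toy titles standing in for Top5_pub$title
titles <- c("Deep Learning with Python",
            "Learning Data Science",
            "Data and Deep Networks")

# Build and clean a small corpus in the same spirit as above
corpus <- VCorpus(VectorSource(titles))
corpus <- tm_map(corpus, content_transformer(tolower))
corpus <- tm_map(corpus, removeWords, stopwords("english"))
corpus <- tm_map(corpus, stemDocument)

# Term-document matrix, then total frequency of each stem
tdm  <- TermDocumentMatrix(corpus)
freq <- sort(rowSums(as.matrix(tdm)), decreasing = TRUE)

print(head(freq, 10))
```

The named vector `freq` can then be compared with what the word cloud shows, or filtered with the same minimum frequency used in `wordcloud()`.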

Conclusion

My conclusions from the above plots and tables, in point form:

- Using dplyr to manipulate the data set was useful whenever the manipulation became complicated.

- The grid and gridExtra packages provide a safe way of combining multiple plots into one plot.

- formattable and kableExtra were crucial in generating informative tables.

- Word clouds and text analysis are very useful and flexible when we use the above packages.

Further Analysis

Similarly, we can carry out the above analysis for the Top 5 publications and other variables.

Word clouds for subtitles would also be interesting to see, especially when focusing on authors and publications.

Please note

This is my sixth post on the internet, so my mistakes in grammar and spelling should be far fewer than in previous posts. I intend to post more statistics-related material in the future and learn accordingly. Thank you for reading.

THANK YOU